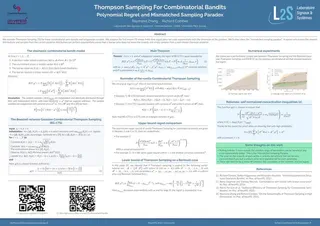

Thompson Sampling For Combinatorial Bandits: Polynomial Regret and Mismatched Sampling Paradox

Abstract

We consider linear stochastic bandits where the set of actions is an ellipsoid. We provide the first known minimax optimal algorithm for this problem. We first derive a novel information-theoretic lower bound on the regret of any algorithm, which must be at least $\Omega(\min(d \sigma \sqrt{T} + d \|\theta\|_{A}, \|\theta\|_{A} T))$, where $d$ is the dimension, $T$ the time horizon, $\sigma^2$ the noise variance, $A$ a matrix defining the set of actions, and $\theta$ the vector of unknown parameters. We then provide an algorithm whose regret matches this bound up to a multiplicative universal constant. The algorithm is non-classical in the sense that it is neither optimistic nor a sampling algorithm. The main idea is to combine a novel sequential procedure to estimate $\|\theta\|_A$ with an explore-and-commit strategy informed by this estimate. The algorithm is highly computationally efficient: a run requires only time $O(dT + d^2 \log(T/d) + d^3)$ and memory $O(d^2)$, in contrast with known optimistic algorithms, which are not implementable in polynomial time. We go beyond minimax optimality and show that our algorithm is locally asymptotically minimax optimal, a much stronger notion of optimality. We further provide numerical experiments to illustrate our theoretical findings. The code to reproduce the experiments is available at https://github.com/RaymZhang/LinearBanditsEllipsoidsMinimaxCOLT.
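To make the two regimes of the lower bound and the explore-and-commit idea more concrete, here is a minimal Python/NumPy sketch. It assumes the action set is the ellipsoid $\{x : x^\top A^{-1} x \le 1\}$, so that $\|\theta\|_A = \sqrt{\theta^\top A \theta}$ is the best achievable expected reward, and that rewards are $\theta^\top x_t$ plus Gaussian noise of standard deviation $\sigma$; the paper's exact conventions may differ. The function names `ellipsoid_norm`, `minimax_rate` and `explore_then_commit`, as well as the fixed exploration length `m`, are hypothetical. The baseline below is a generic explore-then-commit with a fixed budget, not the authors' algorithm, which instead informs its explore-and-commit phase with a sequential estimate of $\|\theta\|_A$.

```python
import math

import numpy as np


def ellipsoid_norm(theta: np.ndarray, A: np.ndarray) -> float:
    """||theta||_A = sqrt(theta^T A theta).

    Under the ASSUMED convention E = {x : x^T A^{-1} x <= 1}, this is the best
    expected reward max_{x in E} theta^T x, attained at A theta / ||theta||_A.
    """
    return math.sqrt(float(theta @ A @ theta))


def minimax_rate(d: int, sigma: float, T: int, theta_norm: float) -> float:
    """Order of the lower bound with universal constants dropped:
    min(d*sigma*sqrt(T) + d*||theta||_A, ||theta||_A * T)."""
    return min(d * sigma * math.sqrt(T) + d * theta_norm, theta_norm * T)


def explore_then_commit(theta, A, sigma, T, m, rng):
    """Hypothetical explore-then-commit baseline with a FIXED exploration length m.

    Not the paper's algorithm. Returns the pseudo-regret
    sum_t (||theta||_A - theta^T x_t).
    """
    d = len(theta)
    n_explore = 2 * d * m
    if n_explore > T:
        raise ValueError("exploration budget 2*d*m exceeds the horizon T")
    best = ellipsoid_norm(theta, A)

    # A = L @ L.T, so each column +/- L[:, i] satisfies x^T A^{-1} x = 1,
    # i.e. it lies on the boundary of the ellipsoid.
    L = np.linalg.cholesky(A)

    # Exploration: play +L[:, i] and -L[:, i] for every axis i, m times over.
    X = np.vstack([s * L[:, i] for _ in range(m) for i in range(d) for s in (1.0, -1.0)])
    y = X @ theta + sigma * rng.standard_normal(n_explore)  # noisy linear rewards
    regret = float(np.sum(best - X @ theta))

    # Commit: least-squares estimate of theta, then the greedy action for that
    # estimate, played for all remaining rounds.
    theta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    x_commit = A @ theta_hat / ellipsoid_norm(theta_hat, A)
    regret += (T - n_explore) * (best - float(theta @ x_commit))
    return regret


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, T, sigma = 5, 10_000, 1.0
    A = np.diag(np.linspace(1.0, 3.0, d))
    theta = rng.standard_normal(d)
    m = math.ceil(math.sqrt(T) / d)  # hypothetical tuning, not from the paper
    print("ETC pseudo-regret:", explore_then_commit(theta, A, sigma, T, m, rng))
    print("lower-bound order:", minimax_rate(d, sigma, T, ellipsoid_norm(theta, A)))
```

Under the assumed convention, the $\|\theta\|_A T$ branch of the bound is exactly the regret of always playing the centre $x = 0$, which is why it caps the rate when $\|\theta\|_A$ is small. A fixed `m` ignores this trade-off, which is the gap an estimate of $\|\theta\|_A$ can close.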

Type

Publication

In The Thirty-Eighth Annual Conference on Learning Theory, 2025

Authors

PhD Student at CentraleSupélec

I was a PhD Student at the L2S lab at CentraleSupélec under the supervision of Richard Combes and Sheng Yang.